Plain English goes in. Code comes out. We tested whether OpenAI can create message filters in Redpanda Console. Here's how it went.

OpenAI has been dominating the headlines lately with its revolutionary ChatGPT. People from all backgrounds, technical and non-technical, have been using the OpenAI APIs to build everything from small tools that make their jobs easier to entire websites from a single prompt.

As an engineer at Redpanda, I’m constantly looking for ways to simplify the way users interact with our products. So, I decided to run a quick experiment and explore the question: can AI make technical tools accessible to non-technical users? In this case, the tool was Redpanda Console.

Redpanda Console is an easy-to-use web UI with everything you need to manage your Redpanda or third-party Apache Kafka® clusters. It provides better visibility into your topics, and lets you easily mask data, manage consumer groups, and explore real-time data with time-travel debugging.

Now, let’s say you have a Kafka topic with a million messages, and you want to find all the addresses in a certain country. There’s just one problem: you need to know how to code. And even if you do, there are plenty of nuances to figure out, like how to write a full function and how to access a message’s key, value, and headers.

Using OpenAI APIs, I developed a quick prototype that allows users to describe the message filter in plain English, then click a button and let AI generate the code. No programming needed.

Here’s a glimpse behind the scenes and a quick walkthrough of the final outcome.

Why use OpenAI for message filtering in Kafka?

The short answer is that message filtering in Kafka is hard.

For starters, Kafka messages are not like rows in a SQL database, where you have a known table structure. Kafka messages can be anything. Luckily, most of the time they are JSON, but filtering them is still an inherently complex task.

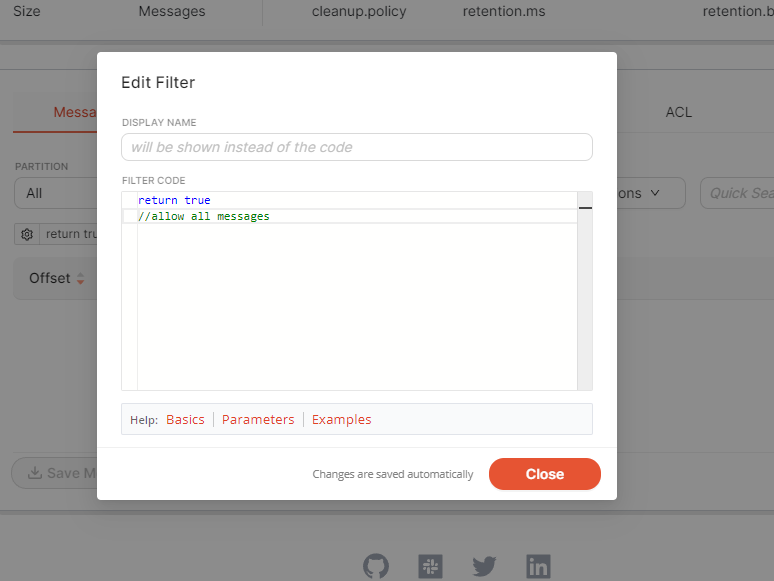

Next, if you’re a developer just getting started with Redpanda Console, you’ll be met with this filter tool:

Redpanda Console spares developers from wrangling SQL by allowing them to describe their message filter using TypeScript (a typed superset of JavaScript). But even if you’re well-versed in it, you still need to understand the API to figure out the right functions to use and how to access the messages. There’s always documentation, but learning something new takes time.

A non-technical user would likely be lost even after reading the documentation.

So how can we make message filtering in Kafka easier? With OpenAI in the picture, I wondered if we could allow users to describe the message filter in plain English, then have AI do the coding for them. After poring over the OpenAI documentation and experimenting with a couple of the GPT-3 models, I settled on “text-davinci-003” and started mapping out the prompts.

The OpenAI prompts: breaking down the logic

The prompt I used consists of three parts. The first defines the structure of the data and lets the AI know how to access the properties of the message that we’re looking for. This is purely for the proof of concept, and would be hidden from the user while the AI uses it behind the scenes.

type Address = { city: string, state: string };

type Message = { id: number, address: Address[], ageInYears: number };

function check(value: Message): boolean { }

The second part is the actual prompt: the message filter description written in plain English. This is where OpenAI really showed its quality, as it had to infer what the legal drinking age in America actually is, as well as connect the dots that by “CA” we were targeting users in North America.

Note that this is a roughly-written prompt that even a human might need to clarify, but to my surprise, it didn’t faze the AI. (That said, the dozen prompts I had written before reaching this one didn’t go down as well.)

The check method should match:

- Only American users above the legal age for drinking

- And only when their ID is below 1000, or their state is "CA"

Each line of code should be at most 40 characters in length.

Lastly, we have the prompt clarification. Since I’m using the OpenAI API, specifically the text-davinci-003 model, and not the conversational ChatGPT interface, I can use this last prompt to define what I expect from the output. (This part would be hidden from the user in a production environment.)

So it would be:

function check(value: Message): boolean
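To make the three-part structure concrete, here is a minimal sketch of how the parts could be joined into a single prompt and wrapped in a request body for OpenAI's legacy completions endpoint. The helper names (`buildPrompt`, `buildCompletionRequest`) are hypothetical, and the `max_tokens` and `temperature` values are illustrative assumptions, not the settings used in the prototype.

```typescript
// Part 1: type context shown to the model (hidden from the user).
const TYPE_CONTEXT = [
  "type Address = { city: string, state: string };",
  "type Message = { id: number, address: Address[], ageInYears: number };",
  "function check(value: Message): boolean { }",
].join("\n");

// Part 3: output cue telling the model where to start writing.
const OUTPUT_CUE = "function check(value: Message): boolean";

// Hypothetical helper: joins the three parts into one prompt string.
function buildPrompt(conditions: string[]): string {
  // Part 2: the user's plain-English description, one bullet per condition.
  const description = [
    "The check method should match:",
    ...conditions.map((c) => `- ${c}`),
    "Each line of code should be at most 40 characters in length.",
  ].join("\n");
  return [TYPE_CONTEXT, description, OUTPUT_CUE].join("\n\n");
}

// Hypothetical helper: request body for the legacy completions endpoint.
// max_tokens and temperature are illustrative values (assumptions).
function buildCompletionRequest(promptText: string) {
  return {
    model: "text-davinci-003",
    prompt: promptText,
    max_tokens: 256,
    temperature: 0,
  };
}

const filterPrompt = buildPrompt([
  "Only American users above the legal age for drinking",
  'And only when their ID is below 1000, or their state is "CA"',
]);
const requestBody = buildCompletionRequest(filterPrompt);
```

Only the middle part changes per user; the type context and the output cue stay fixed, which is what lets them be hidden in the UI.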

The result: AI-generated message filters in just one click

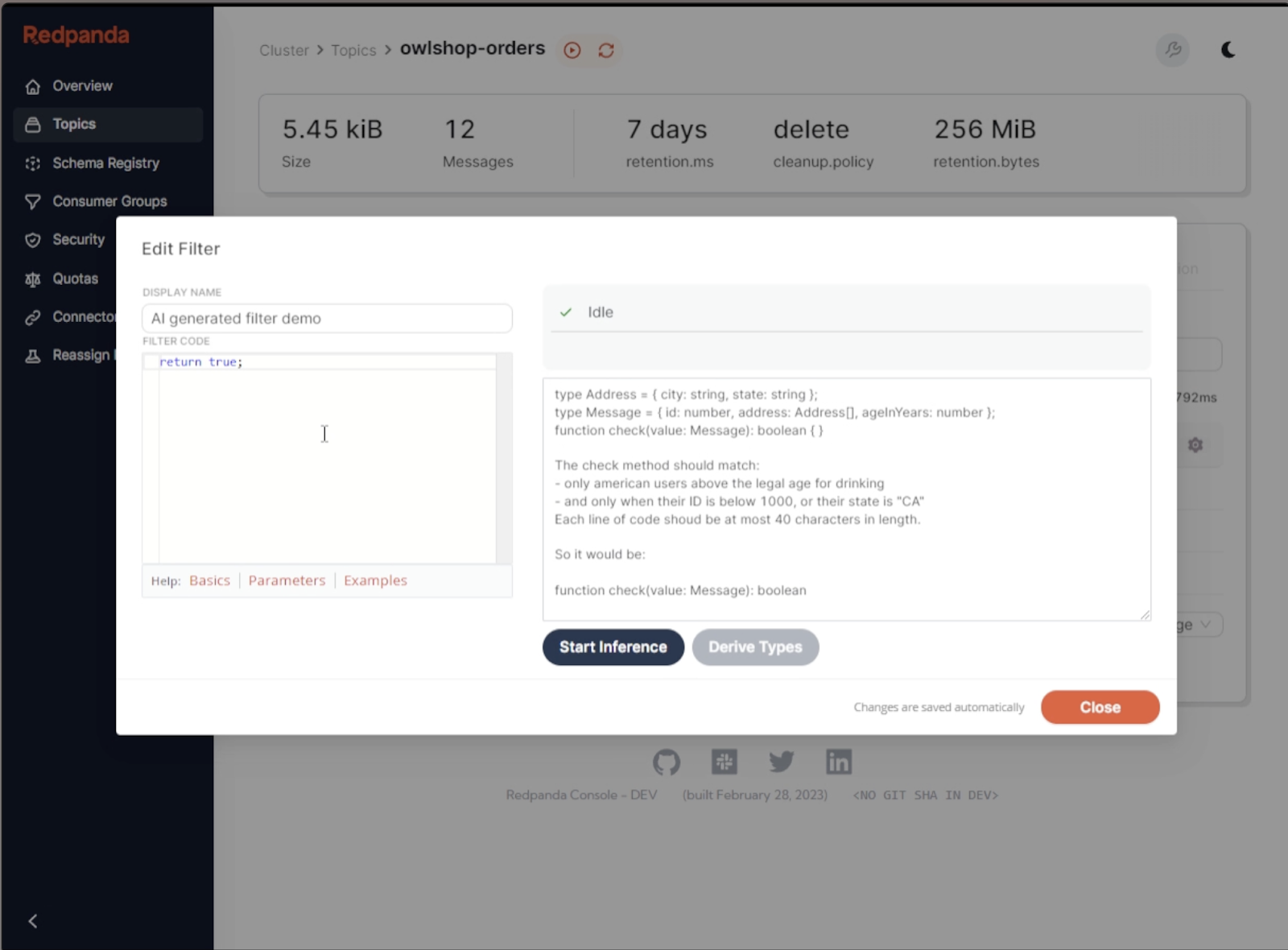

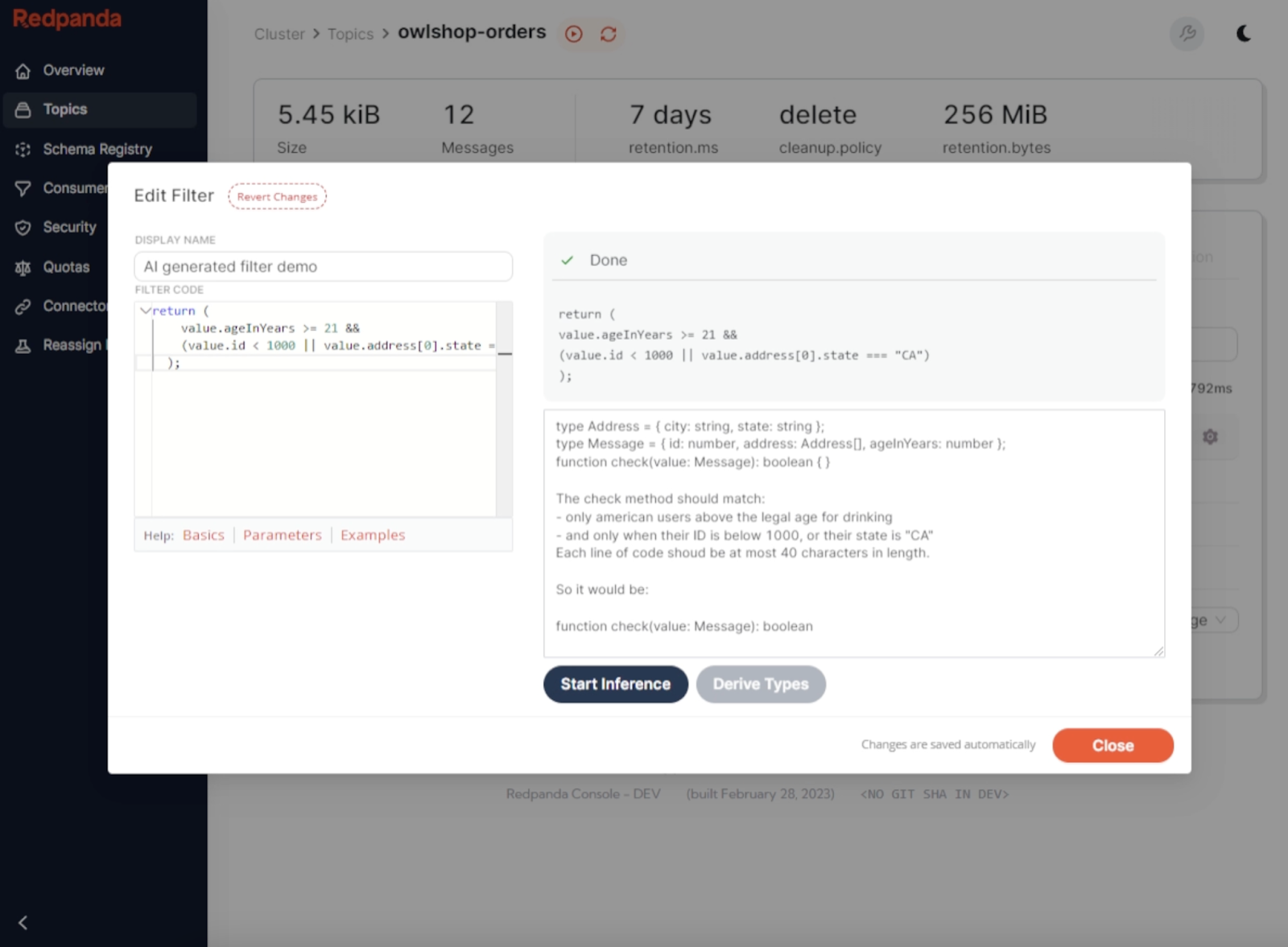

Now that you know the backstory, here’s how it would look on the user’s side.

On the left we have the usual box for the code, and on the right is where the user would write what kind of filter function they want the AI to translate into code. Once that plain English description is in, we can click the Start Inference button and allow the AI to generate the filter code.

The AI-generated code appears on the left side almost instantly, saving the user precious minutes (or potentially hours) of figuring it out themselves.
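For readers who can't see the screenshots, a filter that satisfies the description could look roughly like the following. This is a hand-written illustration, not the model's actual output. It assumes the US legal drinking age is 21 and that "above" includes 21. Because the `Message` type has no country field, "American" cannot be checked directly and is left out of this sketch.

```typescript
type Address = { city: string, state: string };
type Message = { id: number, address: Address[], ageInYears: number };

// Assumption: US legal drinking age is 21, and "above" includes 21.
const LEGAL_DRINKING_AGE = 21;

function check(value: Message): boolean {
  // Condition 1: user is of legal drinking age.
  const ofAge =
    value.ageInYears >= LEGAL_DRINKING_AGE;
  // Condition 2b: at least one address is in state "CA".
  const inCA = value.address.some(
    (a) => a.state === "CA"
  );
  // Condition 1 AND (ID below 1000 OR state is "CA").
  return ofAge &&
    (value.id < 1000 || inCA);
}

const sample: Message = {
  id: 4200,
  address: [{ city: "Fresno", state: "CA" }],
  ageInYears: 30,
};
const sampleMatches = check(sample); // true: of age and in "CA"
```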

The challenges: OpenAI still has its limits

During this process, I found two main pain points: cost and reliability.

At the time of this experiment, OpenAI’s text-davinci-003 model cost $0.02 per 1,000 tokens. For reference, a token is a small chunk of text, roughly four characters of English on average, although in code individual symbols (semicolons, quotes, parentheses, etc.) often count as tokens of their own. It may not sound like much, but if you consider that the short example in this post used around 500 tokens, you can imagine what an enterprise-scale solution might rack up!
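The arithmetic can be sketched in a few lines. The helper name is hypothetical, and the price is passed in as a parameter: the $0.02 per 1,000 tokens used below is an assumed rate for illustration, so substitute whatever rate applies to your model.

```typescript
// Hypothetical helper: estimated cost in US dollars for a number of
// requests that each consume roughly the same number of tokens.
function estimateCostUsd(
  tokensPerRequest: number,
  requests: number,
  usdPer1kTokens: number
): number {
  return ((tokensPerRequest * requests) / 1000) * usdPer1kTokens;
}

// Assumed rate of $0.02 per 1,000 tokens, ~500 tokens per request.
const perRequest = estimateCostUsd(500, 1, 0.02); // about $0.01
const perMillion = estimateCostUsd(500, 1000000, 0.02); // about $10,000
```

A cent per click is negligible for one user, but the same filter generated a million times lands in the range of ten thousand dollars.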

My next quibble is that this technology isn’t foolproof. It follows the old saying “garbage in, garbage out”, and the average user can’t blindly trust that the AI will give them the correct output. As it stands today, it’s tricky to safeguard against errors, misinterpretations, and even offensive language. Even the more advanced ChatGPT has a reputation for presenting answers that “sound credible” but are actually nonsensical on closer inspection.

In the case of Redpanda Console, for example, a user could easily write a prompt like “Please find all messages where...and then generate a PDF that I can download.” That sounds like a perfectly reasonable request—except our web UI doesn’t generate PDFs. I spent plenty of time tweaking the prompts for this proof of concept to prevent incorrect outputs, but we can’t expect non-coders to do this themselves without specialist knowledge.
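One narrow guardrail is to check the model's output before showing it to the user. The sketch below is an assumption about how such a check could look, not something the prototype does: the function name, the deny-list, and the rules are all hypothetical. It verifies that the output starts with the expected signature, that body lines respect the 40-character limit from the prompt, and that no obviously unsupported constructs appear.

```typescript
const EXPECTED_SIGNATURE =
  "function check(value: Message): boolean";
const MAX_LINE_LENGTH = 40;
// Hypothetical deny-list of constructs a message filter should not use.
const FORBIDDEN = ["import ", "require(", "fetch(", "eval("];

// Returns a list of human-readable problems; empty means "looks OK".
function validateFilter(code: string): string[] {
  const problems: string[] = [];
  const trimmed = code.trim();
  if (trimmed.indexOf(EXPECTED_SIGNATURE) !== 0) {
    problems.push("missing expected signature");
  }
  // The signature line comes from the prompt itself, so only the
  // body lines (everything after the first line) are length-checked.
  trimmed.split("\n").slice(1).forEach((line, i) => {
    if (line.length > MAX_LINE_LENGTH) {
      problems.push(`line ${i + 2} exceeds ${MAX_LINE_LENGTH} characters`);
    }
  });
  for (const token of FORBIDDEN) {
    if (trimmed.indexOf(token) !== -1) {
      problems.push(`uses unsupported construct: ${token.trim()}`);
    }
  }
  return problems;
}

const goodOutput = [
  "function check(value: Message): boolean {",
  "  return value.id < 1000;",
  "}",
].join("\n");
const badOutput = "Here is your PDF: fetch('/export.pdf')";

const goodProblems = validateFilter(goodOutput); // no problems reported
const badProblems = validateFilter(badOutput); // signature and fetch( flagged
```

A check like this only catches structural mistakes. It cannot tell whether the generated logic matches what the user meant, which is why the prompt tuning described above still matters.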

Can AI be trained to handle bad requests and even suggest better ones? Certainly. Will it require a lot of work behind the scenes? Most likely.

The verdict: is OpenAI really the future of coding?

In the same way that AI art makes digital illustration possible for non-artists, AI-driven code can make software development possible for non-developers. The final results, however, still require well-planned prompts and narrow guardrails to get what you want. I’d argue that AI tools are still more like “autocorrect on steroids,” where the output is either scarily smart or confidently wrong.

That said, with the ChatGPT API release, we’ve entered the “golden age” of experimentation, and the ripple effect shouldn’t be underestimated. As a side note, the ChatGPT API is 10x cheaper than the existing GPT-3 models, and data submitted through the API is no longer used to train the models (unless developers opt in).

This blog post is just one small taste of how developers could potentially use AI to simplify the user experience. At the moment my little experiment is just a proof of concept, but if you’re interested in seeing this feature in production, let us know in our Redpanda Community on Slack!

To learn more about Redpanda, check out our documentation and browse the Redpanda blog for tutorials and free guides on how to easily integrate with Redpanda. For a more hands-on approach, go ahead and take Redpanda's free Community Edition for a spin.
