Your step-by-step guide to switching to an easy, cost-effective Kafka-compatible alternative

Moving to another streaming data platform is not a decision that’s made lightly, but it’s one many companies are now making to achieve higher throughput, lower latency, and a significantly lower total cost of ownership.

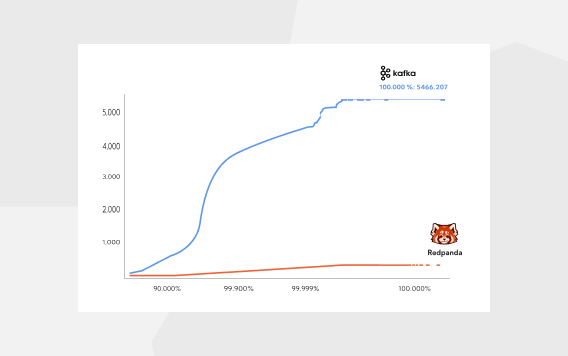

There are many flavors of Kafka implementations, and a popular choice for handling Kafka messaging traffic is Amazon Managed Streaming for Apache Kafka (MSK). While MSK is positioned as a relatively hands-off solution, some teams find that its performance and cost don't quite match their expectations of a fast, cost-effective Kafka implementation. The Kafka operational tax is real, and people are looking for simpler, more cost-effective ways to handle data flow.

Redpanda provides a seamless, drop-in replacement for MSK. We’ve already covered how to migrate from Kafka to Redpanda, giving a high-level overview of moving from a legacy Apache Kafka® environment to a modern, high-performance Redpanda deployment. In this post, we'll walk through a practical way to move data out of MSK and into a Redpanda cluster that works with our Dedicated, Bring Your Own Cloud (BYOC), or Self-hosted deployment models.

Note that this is just one part of a comprehensive migration process that also covers planning, data and application movement, functional validation, and scale testing. To learn about the full scope, check out our free report detailing how to migrate from Kafka services to Redpanda.

Free report: migrating from Kafka to Redpanda

Get a detailed walkthrough, expert tips, and troubleshooting.

A quick stroll down migration lane

Migrating data, consumer offsets, and applications is straightforward. Our primary goal is to minimize the disruption to your application pipeline, especially to your producers and consumers.

Since Redpanda is Kafka API-compatible and a drop-in replacement, your applications shouldn’t require functional code changes. However, minor configuration changes are required for items such as the broker list.
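To make "minor configuration changes" concrete: the bootstrap servers change, and if your clients used MSK's IAM authentication, those SASL settings have to be replaced with whatever your Redpanda cluster uses. The Python sketch below shows that transformation on a plain dict of client properties. The function name is hypothetical, and whether the Redpanda side needs no SASL settings or ones you supply (for example SCRAM) is an assumption that depends on your deployment.

```python
from typing import Dict, Optional

# SASL settings that only make sense for MSK's IAM authentication.
MSK_IAM_KEYS = (
    "sasl.mechanism",
    "sasl.jaas.config",
    "sasl.client.callback.handler.class",
)


def repoint_to_redpanda(
    client_config: Dict[str, str],
    redpanda_bootstrap: str,
    redpanda_security: Optional[Dict[str, str]] = None,
) -> Dict[str, str]:
    """Return a copy of a Kafka client config pointed at Redpanda (hypothetical helper)."""
    cfg = dict(client_config)  # never mutate the caller's MSK config
    cfg["bootstrap.servers"] = redpanda_bootstrap
    if cfg.get("sasl.mechanism") == "AWS_MSK_IAM":
        # Drop the IAM-specific auth settings, including the protocol,
        # so the Redpanda security settings start from a clean slate.
        for key in MSK_IAM_KEYS:
            cfg.pop(key, None)
        cfg.pop("security.protocol", None)
    # Assumption: any Redpanda auth/TLS settings are passed in explicitly.
    cfg.update(redpanda_security or {})
    return cfg
```

Everything else in the client config, such as `group.id`, carries over unchanged, which is what lets consumers resume from translated offsets.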

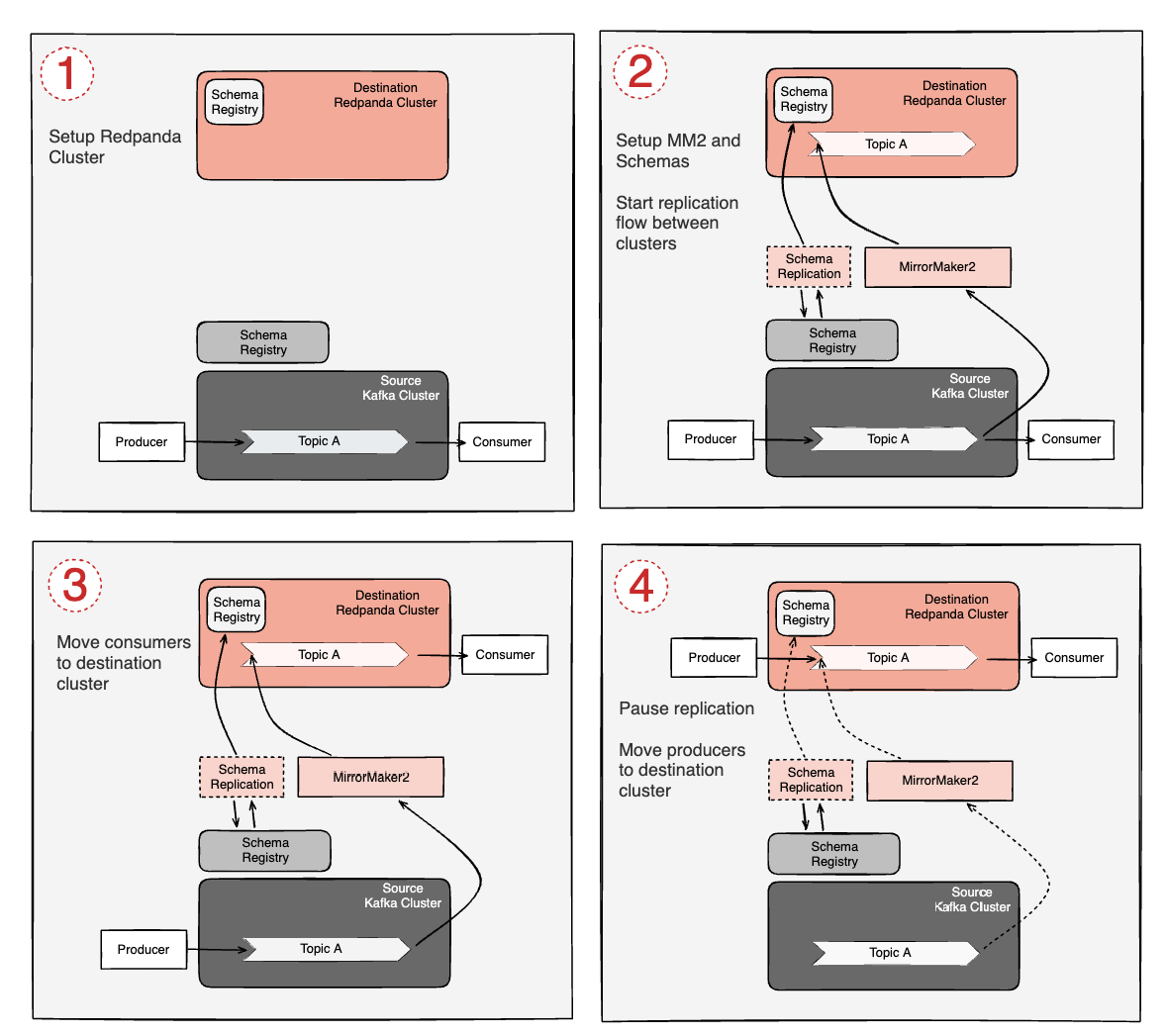

The diagram above illustrates the steps:

1. Set up a Redpanda cluster. For the best data transfer performance between MSK and Redpanda, minimize the network latency between the clusters.

2. Set up a node running MirrorMaker2 that can access both the MSK and Redpanda clusters. Configure the access policies and the MirrorMaker2 properties file, then start copying data from MSK to Redpanda. Make sure consumer group offsets are being replicated and translated. Note: producers and consumers are not touched in this step.

3. Once the initial replication has caught up, shut down the consumers on MSK, reconfigure them to point to Redpanda, and start them again. Note: producers continue publishing to MSK, while consumers now read from Redpanda.

4. Stop the producers and allow MirrorMaker2 replication to catch up with any lagging messages. Stop MirrorMaker2 to prevent accidental duplicate publishes, then reconfigure the producers to point to Redpanda. Note: producers and consumers are now connected directly to Redpanda.

5. Once the producers and consumers have moved to Redpanda, confirm they’re operating correctly, then safely shut down and remove the MSK cluster.
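The cutover steps that move consumers and then producers both hinge on replication having "caught up". One minimal way to express that is per-partition lag: the MSK log-end offset minus the next MSK offset MirrorMaker2 will copy. The Python sketch below assumes you can already obtain both numbers per partition however you measure them in your environment; the function names and inputs are hypothetical.

```python
from typing import Dict, Tuple

Partition = Tuple[str, int]  # (topic, partition)


def replication_lag(
    source_end: Dict[Partition, int],
    replicated_next: Dict[Partition, int],
) -> Dict[Partition, int]:
    """Per-partition lag: records in MSK not yet copied to Redpanda.

    source_end: log-end offset of each partition on MSK.
    replicated_next: next MSK offset MirrorMaker2 will copy per partition.
    A partition missing from replicated_next is treated as not started
    (lag counted from offset 0, which overstates lag if old data expired).
    """
    return {
        tp: max(end - replicated_next.get(tp, 0), 0)
        for tp, end in source_end.items()
    }


def caught_up(source_end, replicated_next, max_lag_per_partition: int = 0) -> bool:
    """True when every partition's lag is within the allowed threshold."""
    lag = replication_lag(source_end, replicated_next)
    return all(v <= max_lag_per_partition for v in lag.values())
```

A small nonzero threshold can be reasonable before moving consumers, while producers are still writing to MSK. Once producers have stopped, waiting for a threshold of 0 matches the intent of letting MirrorMaker2 drain before it is shut down.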

We’ll mainly focus on blocks #1 and #2 in the diagram, providing practical direction on preparing the Redpanda and MSK environments and configuring MirrorMaker2 for both the initial and ongoing replication.

1. AWS prep work and caveats

Amazon MSK uses client Identity and Access Management (IAM) roles to give a user, application, or AWS instance access to the topic data in the MSK cluster. These roles are attached to a policy defining what the role is allowed to do. Since MirrorMaker2 must authenticate to the MSK cluster, you need to create appropriate user credentials via these roles. This allows MirrorMaker2 to connect to the MSK cluster and access the topic data (or metadata).

For this tutorial, we’ll assume an MSK cluster is already running and configured for using SASL_SSL with the AWS_MSK_IAM SASL mechanism. MirrorMaker2 will run from a client machine that's separate from both the MSK and Redpanda clusters. This ensures MirrorMaker2 has sufficient resources for your migration. (Review our migration report for more information on MirrorMaker2 sizing.)

1.1 Configure AWS security group rules

In many deployments, Amazon MSK and Redpanda are configured in separate VPCs and with separate Security Group rules. You need to configure Security Group rules to allow the MirrorMaker2 client machine to access both clusters.

See the Redpanda deployment guide to understand what network ports you need.
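Once the rules are in place, it helps to confirm from the MirrorMaker2 client machine that both clusters are reachable at the TCP level before touching any Kafka configuration. The sketch below is a minimal Python check. It assumes the placeholder broker addresses used later in this post (port 9098 for MSK's IAM listener, 9092 for the Redpanda Kafka API), and it only tests network reachability, not authentication.

```python
import socket


def can_reach(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        # create_connection resolves the host and tries each address in turn.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts
        # (socket.timeout is a subclass of OSError).
        return False
```

For example, call `can_reach("msk-1.example.com", 9098)` and `can_reach("rp-1.example.com", 9092)` for every broker in each cluster (these hostnames are placeholders). A `False` result usually points at a security group rule, routing between VPCs, or DNS.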

1.2 Configure IAM permissions

Review the AWS documentation for creating an IAM role for use with MSK. This also walks you through creating the access policy.

The policy example below requires a correctly configured Amazon Resource Name (ARN) that identifies the MSK cluster. Replace the region, Account-ID, and cluster/Examplename strings with the values for your AWS Region, AWS account, and MSK cluster name. You can find the cluster name in the MSK console.

You may need additional policies defined in your environment, depending on what other IAM roles and policies are in effect. The following allows MirrorMaker2 to replicate from a freshly launched MSK cluster.

```json
{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "kafka-cluster:Connect",
        "kafka-cluster:AlterCluster",
        "kafka-cluster:DescribeCluster"
      ],
      "Resource": [
        "arn:aws:kafka:region:Account-ID:cluster/Examplename/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "kafka-cluster:*Topic*",
        "kafka-cluster:WriteData",
        "kafka-cluster:ReadData"
      ],
      "Resource": [
        "arn:aws:kafka:region:Account-ID:topic/Examplename/*"
      ]
    },
    {
      "Effect": "Allow",
      "Action": [
        "kafka-cluster:AlterGroup",
        "kafka-cluster:DescribeGroup"
      ],
      "Resource": [
        "arn:aws:kafka:region:Account-ID:group/Examplename/*"
      ]
    }
  ]
}
```
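If you manage several clusters or environments, hand-editing the ARNs is easy to get wrong. The Python sketch below renders the same policy from three inputs. The function name and the sample Region, account ID, and cluster name are hypothetical; the statements simply mirror the JSON above.

```python
import json


def msk_mirror_policy(region: str, account_id: str, cluster_name: str) -> dict:
    """Build the MirrorMaker2 IAM policy shown above for one MSK cluster.

    The trailing /* matches any cluster UUID (and, for topics and groups,
    any topic or group name) under the given cluster name.
    """
    prefix = f"arn:aws:kafka:{region}:{account_id}"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "kafka-cluster:Connect",
                    "kafka-cluster:AlterCluster",
                    "kafka-cluster:DescribeCluster",
                ],
                "Resource": [f"{prefix}:cluster/{cluster_name}/*"],
            },
            {
                "Effect": "Allow",
                "Action": [
                    "kafka-cluster:*Topic*",
                    "kafka-cluster:WriteData",
                    "kafka-cluster:ReadData",
                ],
                "Resource": [f"{prefix}:topic/{cluster_name}/*"],
            },
            {
                "Effect": "Allow",
                "Action": [
                    "kafka-cluster:AlterGroup",
                    "kafka-cluster:DescribeGroup",
                ],
                "Resource": [f"{prefix}:group/{cluster_name}/*"],
            },
        ],
    }


if __name__ == "__main__":
    # Hypothetical values: substitute your own Region, account ID, and cluster name.
    policy = msk_mirror_policy("us-east-1", "123456789012", "my-msk-cluster")
    print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM console or fed to whatever infrastructure tooling you already use.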

Once this is created, you should have a new role and policy defined in AWS. In the next section, we’ll attach that role to the client machine where MirrorMaker2 runs.

1.3 The MirrorMaker2 client machine

Once you define the IAM role and configure the policy, you can then attach the role to the client machine instance. This will allow Java clients like MirrorMaker2 to connect and authenticate from this machine.

Amazon provides a custom Java JAR for enabling a Java Kafka client to authenticate to MSK. This must be installed separately, and configured as shown below. Because of the JAR installation requirement, we’ll use the standalone MirrorMaker2 process, rather than launching via Kafka Connect.

```shell
$ wget https://downloads.apache.org/kafka/3.6.0/kafka_2.13-3.6.0.tgz
$ tar zxf kafka_2.13-3.6.0.tgz
$ sudo apt-get install openjdk-11-jdk
$ cd kafka_2.13-3.6.0/libs
$ wget https://github.com/aws/aws-msk-iam-auth/releases/download/v1.1.1/aws-msk-iam-auth-1.1.1-all.jar
```

This installation procedure puts the AWS_MSK_IAM SASL handler on MirrorMaker2's classpath, so it can be used in the authentication settings. For more information on this authentication mechanism, review the Amazon MSK IAM Access Control documentation.
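Before starting MirrorMaker2, it can be worth confirming that IAM authentication works from this machine using the Kafka CLI tools you just downloaded, for example `bin/kafka-topics.sh --bootstrap-server <msk-broker>:9098 --command-config msk-client.properties --list`. The Python sketch below writes such a client properties file using the same four settings the MirrorMaker2 configuration applies to MSK later on; the file name `msk-client.properties` is an assumption.

```python
from pathlib import Path

# The same SASL settings the MirrorMaker2 config applies to the "msk" cluster,
# without the "msk." prefix, so plain Kafka CLI tools can use them.
MSK_IAM_CLIENT_SETTINGS = {
    "security.protocol": "SASL_SSL",
    "sasl.mechanism": "AWS_MSK_IAM",
    "sasl.jaas.config": "software.amazon.msk.auth.iam.IAMLoginModule required;",
    "sasl.client.callback.handler.class": "software.amazon.msk.auth.iam.IAMClientCallbackHandler",
}


def render_properties(settings: dict) -> str:
    """Render a dict as key=value lines in Java .properties format."""
    return "".join(f"{key}={value}\n" for key, value in settings.items())


def write_client_properties(path: Path) -> None:
    # Hypothetical file name; pass it to --command-config on the Kafka CLI tools.
    path.write_text(render_properties(MSK_IAM_CLIENT_SETTINGS))


if __name__ == "__main__":
    write_client_properties(Path("msk-client.properties"))
```

If listing topics works with this file, the IAM role, policy, and JAR are all in place, and any remaining MirrorMaker2 problems are likely to be in its own configuration.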

2. Deploying Redpanda

You can deploy Redpanda with our Cloud control plane if you're using a Dedicated or BYOC instance. If you're self-hosting on Kubernetes, check out our Helm charts and operator. If you opt to deploy straight to a Debian or Red Hat-based system, check out our deployment automation framework based on Ansible.

More details on setup can be found in our Self-hosted or Cloud deployment documentation.

3. Setting up MirrorMaker2

Now that we're ready to configure MirrorMaker2, it's time to create the mm2.properties file. Place this file inside the kafka_2.13-3.6.0 directory created in the MirrorMaker2 client machine section earlier in this post.

This configuration file enables three connectors:

- MirrorHeartbeatConnector emits heartbeats that let you evaluate the liveness of the replication flow and detect when there may be connectivity issues or insufficient bandwidth.
- MirrorCheckpointConnector emits checkpoints that track and translate consumer group offsets between the two clusters.
- MirrorSourceConnector handles the heavy lifting of topic-level data replication.

The topics property lets you choose which topics are migrated. It accepts a comma-separated list of topic names or regular expressions, so you can match all topics, a pattern-defined subset, or just a handful of explicitly named topics.
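To preview which topics a given value would select before you start replicating, you can approximate the filter locally. The Python sketch below treats the property as a comma-separated list of regular expressions that must match the whole topic name. That is our assumption about how MirrorMaker2's default topic filter behaves. The function names and the optional exclude list are illustrative, and the real filter also applies its own default exclusions through MirrorMaker2's topics.exclude setting.

```python
import re
from typing import Iterable, List


def compile_patterns(csv: str) -> "re.Pattern[str]":
    """Join a comma-separated list of names/regexes into one alternation."""
    parts = [p.strip() for p in csv.split(",") if p.strip()]
    return re.compile("|".join(f"(?:{p})" for p in parts))


def select_topics(topics: Iterable[str], include: str, exclude: str = "") -> List[str]:
    """Return topics that fully match `include` and do not fully match `exclude`."""
    inc = compile_patterns(include)
    exc = compile_patterns(exclude) if exclude.strip() else None
    return [
        t for t in topics
        if inc.fullmatch(t) and not (exc and exc.fullmatch(t))
    ]
```

For example, `select_topics(all_topics, "orders-.*, payments")` keeps every topic starting with `orders-` plus `payments`, where `all_topics` is a hypothetical list of topic names taken from MSK.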

You'll note that we explicitly disable topic ACL syncing. We recommend handling ACLs outside the scope of MirrorMaker2, as it tends to handle these in unexpected ways.

```properties
# name
name = msk-to-rp

# prevent re-encoding of data
key.converter = org.apache.kafka.connect.converters.ByteArrayConverter
value.converter = org.apache.kafka.connect.converters.ByteArrayConverter

# cluster names
clusters = msk, redpanda
# source.cluster.alias = ""
# target.cluster.alias = ""

# Bootstrap details
msk.bootstrap.servers = msk-1.example.com:9098,msk-2.example.com:9098,msk-3.example.com:9098
redpanda.bootstrap.servers = rp-1.example.com:9092,rp-2.example.com:9092,rp-3.example.com:9092

# make sure replication goes from msk to redpanda
msk->redpanda.enabled = true
# make sure there's no replication in the other direction
redpanda->msk.enabled = false

topics = mirror-test
groups = .*
replication.factor = 3

# Make sure that your target cluster can accept larger message sizes, for example 30 MB messages
redpanda.producer.max.request.size=31457280

# Make sure topics are created without a cluster name prefix
replication.policy.class=org.apache.kafka.connect.mirror.IdentityReplicationPolicy

# make sure group syncing is working for offset translation
msk->redpanda.sync.group.offsets.enabled = true

# emit offset checkpoints more frequently. Checkpoint emission should be on by default, but let's be explicit
emit.checkpoints.enabled = true
emit.checkpoints.interval.seconds = 10

# configure the heartbeat/checkpoint/offset syncs replication factor
checkpoints.topic.replication.factor=3
heartbeats.topic.replication.factor=3
offset-syncs.topic.replication.factor=3

# disable ACL syncing
sync.topic.acls.enabled = false

# the number of parallel transfers occurring at once. Do not exceed the
# total number of partitions. In dense clusters with many partitions, you may
# need to make this an even divisor of the partition count so as not to
# overwhelm the MirrorMaker2 client machine's resources
tasks.max=10

# necessary for configuring against an IAM-managed MSK cluster
msk.security.protocol=SASL_SSL
msk.sasl.mechanism=AWS_MSK_IAM
msk.sasl.jaas.config=software.amazon.msk.auth.iam.IAMLoginModule required;
msk.sasl.client.callback.handler.class=software.amazon.msk.auth.iam.IAMClientCallbackHandler

# always go back to the earliest offset to ensure no gaps occur in case of error
# note: this can make MM2 publish duplicates to the destination in error scenarios
auto.offset.reset=earliest
```
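A typo in mm2.properties often surfaces only as a confusing startup failure, so a quick pre-flight check can save a restart. The Python sketch below is a deliberately simplified parser plus a few checks that mirror the choices made above: one-way msk->redpanda replication, IAM auth on the MSK side, a positive tasks.max, and no `//`-style lines, which the .properties format does not treat as comments. The function names are hypothetical, and the checks are ours, not MirrorMaker2's own validation.

```python
from typing import Dict, List


def parse_properties(text: str) -> Dict[str, str]:
    """Parse simple key=value lines.

    Simplified on purpose: skips blank lines and lines starting with # or !,
    splits on the first '=', and ignores the line continuations and escape
    sequences that the full Java .properties format allows.
    """
    props: Dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith(("#", "!")):
            continue
        key, sep, value = line.partition("=")
        if sep:
            props[key.strip()] = value.strip()
    return props


def check_mm2_config(props: Dict[str, str]) -> List[str]:
    """Return human-readable problems; an empty list means nothing was flagged."""
    problems = []
    clusters = [c.strip() for c in props.get("clusters", "").split(",")]
    for alias in ("msk", "redpanda"):
        if alias not in clusters:
            problems.append(f"cluster alias '{alias}' missing from clusters")
        if not props.get(f"{alias}.bootstrap.servers"):
            problems.append(f"{alias}.bootstrap.servers is not set")
    if props.get("msk->redpanda.enabled", "").lower() != "true":
        problems.append("msk->redpanda.enabled should be true")
    # An absent reverse flow is treated as disabled.
    if props.get("redpanda->msk.enabled", "false").lower() != "false":
        problems.append("redpanda->msk.enabled should be false")
    if props.get("msk.sasl.mechanism") != "AWS_MSK_IAM":
        problems.append("msk.sasl.mechanism should be AWS_MSK_IAM for IAM auth")
    tasks = props.get("tasks.max", "1")
    if not tasks.isdigit() or int(tasks) < 1:
        problems.append("tasks.max must be a positive integer")
    for key in props:
        if key.startswith("//"):
            problems.append(f"'{key}' looks like a // comment; .properties files use # or !")
    return problems
```

To use it, read the contents of mm2.properties, pass them through `parse_properties`, and fix anything `check_mm2_config` reports before starting MirrorMaker2.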

4. Start MirrorMaker2

On the MirrorMaker2 client machine, run this command from the kafka_2.13-3.6.0 directory, where mm2.properties lives:

```shell
$ ./bin/connect-mirror-maker.sh mm2.properties
```

Once the process starts, you should see multiple connectors start up in the logs, including MirrorHeartbeatConnector, MirrorSourceConnector, and MirrorCheckpointConnector.

Offset translation caveats

In most deployments, consumer group offset translation is necessary so consumers can move seamlessly from one cluster to the other and pick up where they left off. The MirrorCheckpointConnector is critical for this process. If it's working correctly, you should see log messages from connect-mirror-maker.sh similar to the following:

```text
[2023-10-26 04:50:56,044] INFO [MirrorCheckpointConnector|worker] Found 1 consumer groups for msk->redpanda. 1 are new. 0 were removed. Previously had 0. (org.apache.kafka.connect.mirror.MirrorCheckpointConnector:174)
[2023-10-26 05:00:55,935] INFO [MirrorCheckpointConnector|worker] refreshing consumer groups took 726 ms (org.apache.kafka.connect.mirror.Scheduler:95)
[2023-10-26 05:00:56,086] INFO [MirrorCheckpointConnector|task-0|offsets] WorkerSourceTask{id=MirrorCheckpointConnector-0} Committing offsets for 1 acknowledged messages (org.apache.kafka.connect.runtime.WorkerSourceTask:233)
```

and

```text
[2023-10-26 04:50:57,263] INFO [MirrorCheckpointConnector|task-0] task-thread-MirrorCheckpointConnector-0 checkpointing 1 consumer groups msk->redpanda: [kafka-test]. (org.apache.kafka.connect.mirror.MirrorCheckpointTask:114)
```
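If you're tracking many consumer groups, scanning these logs by eye gets tedious. The Python sketch below extracts group names from checkpoint lines like the last one above and reports which expected groups have not been checkpointed yet. The regular expression is written against the sample line in this post only. Log formats can change between Kafka versions, so treat it as an assumption to verify against your own output.

```python
import re
from typing import Iterable, List, Optional, Tuple

# Matches the MirrorCheckpointTask sample line, e.g.
# "... checkpointing 1 consumer groups msk->redpanda: [kafka-test]. ..."
CHECKPOINT_LINE = re.compile(
    r"checkpointing (\d+) consumer groups (\S+)->(\S+): \[([^\]]*)\]"
)


def parse_checkpoint_line(line: str) -> Optional[Tuple[str, str, List[str]]]:
    """Return (source, target, groups) for a checkpoint log line, else None."""
    m = CHECKPOINT_LINE.search(line)
    if not m:
        return None
    groups = [g.strip() for g in m.group(4).split(",") if g.strip()]
    return m.group(2), m.group(3), groups


def groups_not_checkpointed(lines: Iterable[str], expected: Iterable[str]) -> List[str]:
    """Return the expected groups that never appear in a checkpoint line."""
    seen = set()
    for line in lines:
        parsed = parse_checkpoint_line(line)
        if parsed:
            seen.update(parsed[2])
    return sorted(set(expected) - seen)
```

Feeding it the MirrorMaker2 log lines along with the list of groups your consumers use gives a quick answer to whether offset translation covers every group you plan to move.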

You may need to tune emit.checkpoints.interval.seconds up or down depending on your overall load or how frequently offsets are updated. High-volume clusters with many offset updates may require increasing it.
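It also helps to understand why a migrated consumer group may re-read a few messages after switching. MirrorMaker2 records offset syncs that pair an MSK offset with the Redpanda offset of the same record, and checkpoints translate each group's committed offset using those pairs. The Python function below is a simplified, hypothetical model of conservative translation, not MirrorMaker2's actual code, whose details have changed between Kafka releases. It never skips records, at the cost of possibly replaying the records between the last sync and the committed offset.

```python
from bisect import bisect_right
from typing import List, Optional, Tuple

# (upstream MSK offset, downstream Redpanda offset) of the same record.
OffsetSync = Tuple[int, int]


def translate_offset(syncs: List[OffsetSync], committed: int) -> Optional[int]:
    """Conservatively translate an upstream committed offset (simplified model).

    `syncs` must be sorted by upstream offset. Uses the latest sync at or
    before `committed`. If the committed offset lies past that sync, resume
    just after the synced record: the consumer may re-read some records but
    never skips any. Returns None when no usable sync exists yet.
    """
    upstream = [u for u, _ in syncs]
    i = bisect_right(upstream, committed) - 1
    if i < 0:
        return None
    sync_up, sync_down = syncs[i]
    return sync_down if committed == sync_up else sync_down + 1
```

For example, with syncs `[(0, 0), (100, 90), (200, 180)]`, a group committed at MSK offset 150 resumes at Redpanda offset 91, which means MSK records 101 through 149 are delivered again. Idempotent processing on the consumer side makes that replay harmless.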

Evaluating performance and lag

By default, MirrorMaker2 exposes status metrics via Java JMX. If you don't have a way to query JMX, you can expose them as Prometheus metrics with the jmx_exporter from the Prometheus project, scrape them with a standard Prometheus server, and visualize them in Grafana.

We recommend using the jmx_exporter Java Agent method. You can supply the additional arguments to connect-mirror-maker.sh by setting the EXTRA_ARGS environment variable before running the command. The project README has more details on configuring the exporter.
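During the initial copy, a quick script can pull out the numbers you care about without a full Prometheus and Grafana stack. The Python sketch below fetches the exporter's metrics page and filters samples by metric name. The exact metric names depend on your jmx_exporter rules, so both the `replication_latency` substring (chosen on the assumption that your rules expose the MirrorSourceConnector's replication-latency-ms metric under a similar name) and the exporter URL are assumptions to adjust for your setup.

```python
from typing import Dict
from urllib.request import urlopen


def parse_prometheus_text(text: str, name_substring: str) -> Dict[str, float]:
    """Return {series: value} for samples whose metric name contains name_substring.

    Handles the simple `name{labels} value` and `name value` forms of the
    Prometheus text format; # HELP / # TYPE lines and trailing timestamps
    are ignored.
    """
    out: Dict[str, float] = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        if "{" in line:
            end = line.rindex("}")
            series, rest = line[: end + 1], line[end + 1 :]
        else:
            series, _, rest = line.partition(" ")
        fields = rest.split()
        if not fields:
            continue
        name = series.split("{", 1)[0]
        if name_substring in name:
            out[series] = float(fields[0])
    return out


def fetch_metrics(url: str, timeout: float = 5.0) -> str:
    """Fetch the exporter's metrics page as text."""
    with urlopen(url, timeout=timeout) as resp:
        return resp.read().decode("utf-8")
```

For example, `parse_prometheus_text(fetch_metrics("http://localhost:9404/metrics"), "replication_latency")`, where the port is whatever you configured for the jmx_exporter agent (9404 here is hypothetical).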

Some final tips

MirrorMaker2 is an asynchronous replication mechanism. In high-volume publishing systems, this means messages may appear in Redpanda some time after they were originally published to MSK.

It’s essential to size your MirrorMaker2 client node not only to keep up with the rate at which messages are published to MSK, but also to publish those messages into Redpanda at the same rate.

For example, if you're publishing 1 GB/s into MSK, your client machine needs to handle at least 2 GB/s of network traffic: 1 GB/s consumed from MSK and 1 GB/s published to Redpanda.
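The same arithmetic is written out below as a small Python sketch so you can plug in your own rates. It assumes GB means 10^9 bytes and ignores protocol overhead and compression, so treat the result as a lower bound. The optional headroom margin is our own addition, not a Redpanda recommendation. Network interfaces are usually rated in bits per second, and consuming is inbound traffic while publishing is outbound, so the sketch reports each direction in Gbit/s as well as the total.

```python
def mm2_network_budget(ingress_bytes_per_sec: float, headroom: float = 0.0) -> dict:
    """Estimate MirrorMaker2 client network needs for a given MSK publish rate.

    Every byte is read once from MSK (inbound) and written once to
    Redpanda (outbound). `headroom` (e.g. 0.25 for 25%) is an optional,
    assumed safety margin.
    """
    inbound = ingress_bytes_per_sec * (1 + headroom)   # consumed from MSK
    outbound = ingress_bytes_per_sec * (1 + headroom)  # published to Redpanda
    total = inbound + outbound
    return {
        "inbound_gbit_per_sec": inbound * 8 / 1e9,
        "outbound_gbit_per_sec": outbound * 8 / 1e9,
        "total_bytes_per_sec": total,
        "total_gbit_per_sec": total * 8 / 1e9,
    }
```

For the 1 GB/s example, `mm2_network_budget(1e9)` gives 2 GB/s in total, which is 8 Gbit/s in each direction or 16 Gbit/s combined.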

Additionally, sizing tasks.max appropriately is something of an art, and you'll need to experiment in your own environment during the initial data migration. Set it too low and you may not get enough parallelism to copy your partitions in time. Set it too high and you waste resources or run into process and scheduling contention.
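One way to start that experiment is to list the values the mm2.properties comments recommend: values no larger than the total partition count that divide it evenly. The Python helper below is a hypothetical starting point, not a Redpanda or MirrorMaker2 tool. Choose from its output based on the CPU and memory you observe on the client machine during the initial copy.

```python
def candidate_tasks_max(total_partitions: int, ceiling: int) -> list:
    """List tasks.max values that evenly divide the partition count, up to ceiling.

    Never exceeds the total partition count, and only returns even divisors,
    so each task ends up with the same number of partitions. `ceiling` is the
    most tasks you believe the client machine can sustain (an assumption).
    """
    if total_partitions < 1 or ceiling < 1:
        raise ValueError("total_partitions and ceiling must be positive")
    limit = min(total_partitions, ceiling)
    return [n for n in range(1, limit + 1) if total_partitions % n == 0]
```

For 48 partitions and a ceiling of 10 tasks, it returns `[1, 2, 3, 4, 6, 8]`, so setting tasks.max to 8 would give each task 6 partitions.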

For more tips, read our full migration report to learn what else might affect how your data migration runs.


Wrapping up

Moving platforms doesn't have to be scary, and it's important to show people how to easily and safely get out of their legacy platforms. We’ve helped several customers successfully migrate out of Amazon MSK and into Redpanda, enabling them to experience a better, faster, and more cost-effective Kafka platform.

If you have questions about how to do this in your own environment, contact our team or join the Redpanda Community on Slack to chat with our engineers. You can also get a good preview of what the migration process involves with our on-demand Streamcast and Masterclass.

Wherever you are in your migration journey, we're happy to guide you along the way.
