A friendly introduction to real-time analytics and how to get started

Data drives innovation, but only if you can capture and analyze it before it loses relevance. In today's fast-paced world, where information is generated at an unprecedented rate, the ability to process and analyze data in real time has gone from a “nice to have” to an absolute “must-have.”

In a nutshell, real-time analytics offers a clear, immediate picture of your data so you can act and react in an instant. For example, global powerhouse Amazon uses real-time analytics to deliver personalized shopping experiences. Capital One instantly determines creditworthiness by analyzing economic indicators and customer information, while the hugely popular game Fortnite relies on real-time analytics to track in-game events and refine the player experience.

From cybersecurity to IoT, the applications for real-time analytics are nearly endless. And, according to a 2023-2024 state of streaming data report, real-time analytics is one of the main reasons organizations adopt streaming data in the first place! Yet many of them still struggle to analyze data fast enough to act on it before it grows stale and loses value.

If you’re just stepping into this crucial practice, consider this post your 101 on real-time analytics. We’ll explain what it is, some common challenges, popular use cases, and the best tools to get you on the right track.

Ready? Let’s start with the basics.

What is real-time analytics?

Real-time analytics is the process of analyzing data as soon as it’s available, so users can gain insights and act on them immediately. This is highly beneficial, and sometimes crucial, for industries that rely on split-second decisions in changing circumstances: anything from predicting market changes and detecting payment fraud to personalizing ads and recommending products at exactly the right moment.

In short, real-time analytics is like having a superpower in a fiercely competitive business landscape. With faster decision-making, organizations can adapt to trends and changes as they happen, boosting operational efficiency and customer satisfaction. And instead of waiting for bad stuff to happen (like fraud, breakdowns, and errors), organizations can save time and money by predicting and preventing it altogether.
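To make "analyzing data as soon as it's available" concrete, here is a minimal Python sketch of a per-event fraud rule: each payment is checked against its card's running average the moment it arrives, and the running state is updated right away. The `Payment` and `RunningFraudCheck` names, the 5x multiplier, and the three-payment minimum history are hypothetical choices for illustration, not a real fraud model.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Payment:
    card_id: str
    amount: float


class RunningFraudCheck:
    """Hypothetical rule: flag a payment far above the card's running average.

    multiplier and min_history are illustrative assumptions, not tuned values.
    """

    def __init__(self, multiplier: float = 5.0, min_history: int = 3) -> None:
        self.multiplier = multiplier
        self.min_history = min_history
        self.count = defaultdict(int)    # payments seen so far, per card
        self.total = defaultdict(float)  # running sum of amounts, per card

    def process(self, payment: Payment) -> bool:
        """Score one payment on arrival, then fold it into the running state."""
        n = self.count[payment.card_id]
        suspicious = False
        if n >= self.min_history:
            mean = self.total[payment.card_id] / n
            suspicious = payment.amount > self.multiplier * mean
        self.count[payment.card_id] = n + 1
        self.total[payment.card_id] += payment.amount
        return suspicious


checker = RunningFraudCheck()
stream = [Payment("card-1", amount) for amount in (20.0, 25.0, 30.0, 900.0)]
flags = [checker.process(p) for p in stream]
print(flags)  # [False, False, False, True]
```

Because each payment is scored as it arrives rather than in a later batch job, a suspicious one can be acted on immediately instead of being discovered after the fact.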

What are popular real-time analytics use cases?

So where can real-time analytics be applied? The answer: Everywhere! Here's a quick rundown of some noteworthy use cases across different industries:

Gaming – Game developers use real-time analytics to monitor player behavior, engagement, and performance while the game is being played. Developers can gain valuable insights into player preferences, identify gameplay issues, and make data-driven decisions to enhance the gaming experience.

Finance – Real-time analytics makes fraud, money laundering, and stock market fluctuations visible the moment they happen. Financial institutions can monitor market movements, adapt strategies to maximize profitability, spot trading opportunities, assess portfolio risk, and limit financial losses, all without delay. For instance, Capital One analyzes real-time credit data, economic indicators, and customer information to evaluate creditworthiness on the spot and dynamically adjust credit limits or pricing.

eCommerce – From personalized product recommendations to instant shopping trend analysis, real-time analytics can supercharge digital shopping. By identifying patterns in user browsing behavior, purchase history, and real-time contextual data, e-commerce platforms can offer instant, tailored recommendations that enhance the user experience, drive engagement, and increase sales.

AdTech – Real-time bidding and instant ad optimization are transforming the world of digital advertising. Real-time analytics allows instant targeting decisions that consider the most up-to-date user information and maximize the chances of engagement and conversion. For example, Criteo, an adtech company that specializes in personalized advertising, analyzes an audience’s real-time behavior and characteristics to deliver targeted ads to relevant user segments. This helps improve ad relevance, engagement, and conversion rates.

Security – Real-time analytics enables immediate alerts and automated responses to potential security breaches, helping protect networks and systems from unauthorized access. Video surveillance systems also analyze live video feeds in real time and detect suspicious behavior, unauthorized access, or potential security incidents.

Supply chain – By analyzing incoming orders, sales data, customer demand, and supply chain information, organizations can dynamically update inventory levels, trigger reordering processes, and provide accurate product availability information to customers. For example, Amazon can adjust inventory levels in real time to ensure there’s enough stock while minimizing excess inventory, improving operational efficiency and reducing costs.

IoT – With countless devices and sensors generating data, real-time analytics ensures their readings are instantly translated into actions for everything from smart homes to autonomous vehicles. It’s also highly valuable when paired with AI to identify patterns and make intelligent decisions in applications such as predictive insights, anomaly detection, and automated control systems.

What are the main challenges of real-time analytics?

Despite the undeniable benefits and valuable use cases, real-time analytics is an incredibly demanding field. You need powerful infrastructure to process vast amounts of data in real time, and that infrastructure can be extremely expensive. Here are a few potential roadblocks to plan around:

Too many data sources, not enough insight. Many real-time analytics use cases pull data from sources like IoT sensors, media outlets, and applications. That data often arrives in different formats, with varying accuracy and its own flaws. Plan for validating and cleaning it up front, so your analysis runs on quality data.
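As a rough illustration of planning for mixed inputs, the sketch below normalizes readings from two hypothetical sources (a sensor that reports Fahrenheit with epoch timestamps and an app that reports Celsius with ISO-8601 timestamps) into one common shape, and drops records that are malformed or implausible. All field names and the plausibility bounds are assumptions made for this example.

```python
from datetime import datetime, timezone
from typing import Optional


def normalize(record: dict) -> Optional[dict]:
    """Map a raw reading to {source, celsius, ts}, or return None if it's unusable."""
    try:
        if "temp_f" in record:
            # Hypothetical sensor format: Fahrenheit, epoch-seconds timestamp
            celsius = (float(record["temp_f"]) - 32) * 5 / 9
            ts = datetime.fromtimestamp(float(record["ts"]), tz=timezone.utc)
            source = str(record["device"])
        elif "temperature_c" in record:
            # Hypothetical app format: Celsius, ISO-8601 timestamp string
            celsius = float(record["temperature_c"])
            ts = datetime.fromisoformat(record["timestamp"])
            if ts.tzinfo is None:
                ts = ts.replace(tzinfo=timezone.utc)  # assume UTC when no offset is given
            source = str(record["id"])
        else:
            return None  # unknown source format
    except (KeyError, TypeError, ValueError, OverflowError, OSError):
        return None  # missing field or unparseable value
    if not -50.0 <= celsius <= 150.0:  # plausibility bounds are an assumption
        return None
    return {"source": source, "celsius": round(celsius, 2), "ts": ts}


raw = [
    {"device": "sensor-1", "temp_f": 212, "ts": 0},
    {"id": "app-7", "temperature_c": "21.5", "timestamp": "2024-01-01T00:00:00+00:00"},
    {"device": "sensor-2", "temp_f": "n/a", "ts": 0},  # malformed reading
]
clean = [r for r in (normalize(x) for x in raw) if r is not None]
print(len(clean))  # 2
```

Doing this normalization once, close to ingestion, means every downstream consumer can rely on a single schema instead of re-implementing per-source parsing.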

Prepare for the data flood. You need a robust and scalable architecture that can handle many data sources sharing data in real time. There’s no point in analyzing real-time data for dynamic decision-making if your infrastructure struggles to deliver the insights you need when you need them.

Systems are often too slow. Traditional systems usually can’t keep up with the data-intensive requirements of accurate, timely real-time analytics. Batch processing, for example, typically runs an extract, transform, and load (ETL) job on a schedule, so there’s a considerable delay between when data is collected and when insights are derived.
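A quick back-of-the-envelope model shows where that batch delay comes from. Assuming a job that starts every hour, takes 10 minutes to run, and only picks up events that arrived before it started, the Python sketch below computes how long each event waits for its insight. The schedule, runtime, and the 50 ms per-event streaming latency are illustrative assumptions, not measurements.

```python
import math
from statistics import mean


def batch_delay_s(arrival_s: float, interval_s: float, runtime_s: float) -> float:
    """Seconds until a scheduled batch job has produced results for an event.

    Assumes jobs start at every multiple of interval_s and include only
    events that arrived strictly before the job started.
    """
    next_start = (math.floor(arrival_s / interval_s) + 1) * interval_s
    return (next_start - arrival_s) + runtime_s


# One event per minute over an hour; hourly batch job that runs for 10 minutes
arrivals = [minute * 60.0 for minute in range(60)]
avg_batch = mean(batch_delay_s(t, interval_s=3600.0, runtime_s=600.0) for t in arrivals)

streaming_delay_s = 0.05  # hypothetical per-event processing latency for a stream processor

print(f"average batch delay: {avg_batch / 60:.1f} min")          # 40.5 min
print(f"streaming delay:     {streaming_delay_s * 1000:.0f} ms")  # 50 ms
```

On average an event waits half the batch interval plus the job runtime, which is why shrinking the schedule alone rarely closes the gap with per-event processing.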

The speed at which real-time data is processed can sometimes lead to accuracy trade-offs. And there’s no use having data in real time if it’s wrong and leads you to the wrong decisions. But with the right tools, these challenges can be managed effectively, which brings us to our next point.

What are the most popular real-time analytics tools?

Time to acquaint yourself with the array of tools at your disposal: data streaming platforms, stream processors, and serving layers.

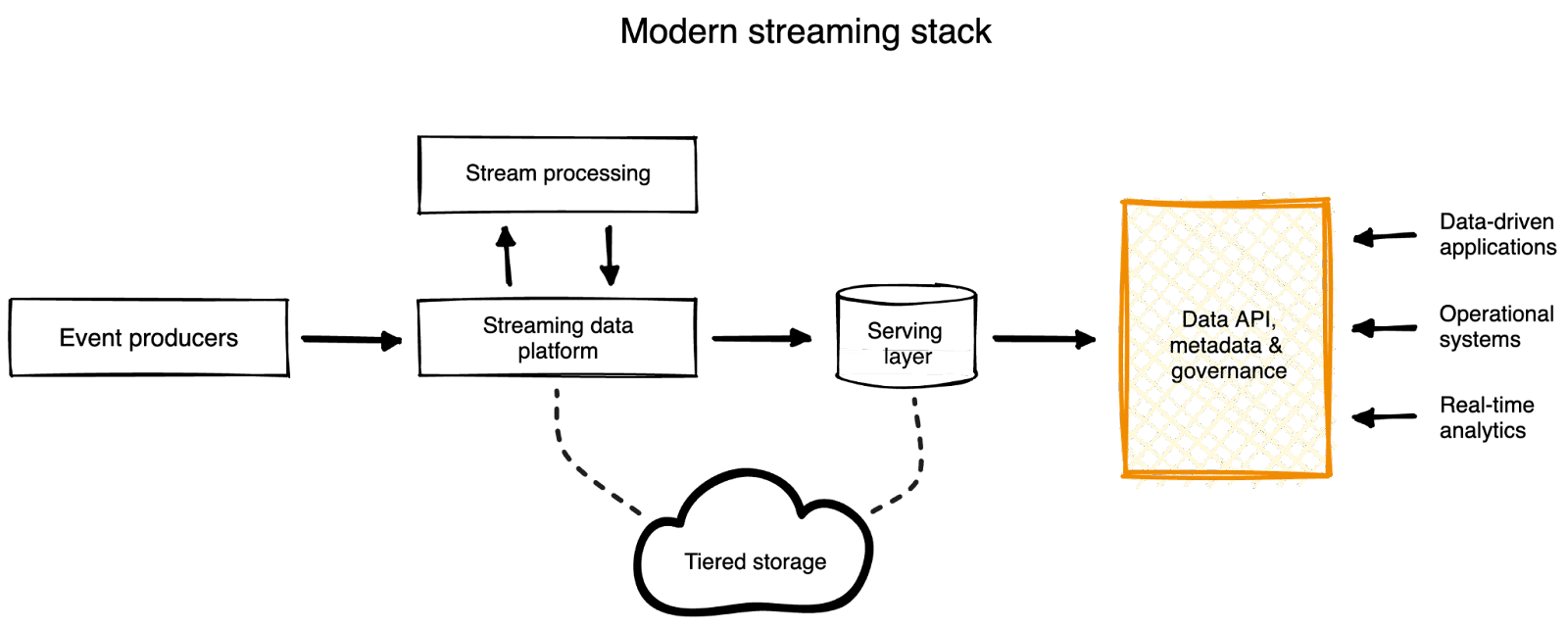

We explained the role of event producers, stream processing, and streaming data platforms in our blog post on what streaming data is. In this post, we’ll cover their role in real-time analytics and take a closer look at tiered storage, the serving layer, and analytics consumers.

Event producers are devices and platforms that register and share events. In a real-time gaming use case, a producer could be a PC, a PlayStation, or any other gaming platform. It sends gaming events to the next element in the pipeline.
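For a feel of what a producer actually emits, here is a minimal Python sketch that serializes a hypothetical gaming event to JSON and picks a partition from the player ID, so all of one player's events land in the same partition and keep their order. The `GameEvent` fields and the `encode` helper are assumptions, and `zlib.crc32` is only a stand-in hash; it is not the partitioner that Kafka or Redpanda clients use by default. A real producer would hand the key and value to a client library rather than return them.

```python
import json
import time
import zlib
from dataclasses import asdict, dataclass
from typing import Tuple


@dataclass
class GameEvent:
    player_id: str
    platform: str    # e.g. "pc" or "playstation"
    event_type: str  # e.g. "match_start" or "item_purchase"
    ts_ms: int       # event time in milliseconds since the epoch


def encode(event: GameEvent, num_partitions: int) -> Tuple[int, bytes, bytes]:
    """Hypothetical helper: return (partition, key, value), keyed by player ID."""
    key = event.player_id.encode("utf-8")
    # Same key -> same partition, which preserves per-player ordering.
    # crc32 is an illustrative stand-in, not a client's default partitioner.
    partition = zlib.crc32(key) % num_partitions
    value = json.dumps(asdict(event)).encode("utf-8")
    return partition, key, value


now_ms = int(time.time() * 1000)
start = encode(GameEvent("player-42", "pc", "match_start", now_ms), num_partitions=6)
buy = encode(GameEvent("player-42", "pc", "item_purchase", now_ms + 500), num_partitions=6)
print(start[0] == buy[0])  # True: both events go to the same partition
```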

Streaming data platforms are the linchpins of any real-time data architecture. These platforms provide high-throughput, low-latency processing capabilities to handle massive, fast-moving data streams. We need them to help bridge the gap between data producers (such as sensors, apps, servers) and data consumers (like databases and analytics platforms). Apache Kafka® and its simpler, less costly counterpart—Redpanda—are true juggernauts in this area.
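The decoupling a streaming data platform provides can be illustrated with a toy, single-partition append-only log in Python: producers append records and get back offsets, while each consumer group tracks its own read position, so a dashboard and a fraud checker can read the same stream at different paces. `ToyLog` and its methods are hypothetical and deliberately simplified (no persistence, replication, or partitions); they are not the Kafka or Redpanda API.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


class ToyLog:
    """In-memory, single-partition append-only log with per-group offsets."""

    def __init__(self) -> None:
        self.records: List[bytes] = []
        # Next offset to read, per consumer group (starts at 0)
        self.next_offset: Dict[str, int] = defaultdict(int)

    def append(self, value: bytes) -> int:
        """Append a record and return its offset."""
        self.records.append(value)
        return len(self.records) - 1

    def poll(self, group: str, max_records: int = 100) -> List[Tuple[int, bytes]]:
        """Read up to max_records for a group and advance that group's offset."""
        start = self.next_offset[group]
        batch = self.records[start:start + max_records]
        self.next_offset[group] = start + len(batch)
        return [(start + i, value) for i, value in enumerate(batch)]


log = ToyLog()
for event in (b"kill", b"death", b"purchase"):
    log.append(event)

dashboard = log.poll("dashboard")         # reads offsets 0-2
fraud = log.poll("fraud", max_records=2)  # reads offsets 0-1 only
log.append(b"purchase")
dashboard_next = log.poll("dashboard")
fraud_next = log.poll("fraud")
print(dashboard_next)  # [(3, b'purchase')]
print(fraud_next)      # [(2, b'purchase'), (3, b'purchase')]
```

Because the log retains records and each group owns its offset, a slow consumer never holds back a fast one, and neither needs to know the producer exists.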

Stream processors are systems that can process and analyze incoming data streams in real time. They perform complex computations and analytics on the fly, so we get instant insights from continuously streaming data. Tools like Apache Flink®, Apache Spark™, and other stream processors enable businesses to respond in real time to emerging trends, anomalies, or patterns in their data and can drive immediate decision-making and action. With the right storage and serving layer, that is.
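One of the most common stream-processing computations is a windowed aggregation. The plain-Python sketch below counts events per player in fixed, non-overlapping (tumbling) 10-second windows and emits each window's result as soon as the window closes. It assumes events arrive in timestamp order; engines like Apache Flink® also handle late and out-of-order events (for example with watermarks), which this sketch leaves out. The event shape and window size are assumptions.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, Optional, Tuple

Event = Tuple[int, str]  # hypothetical shape: (timestamp in ms, player_id)


def tumbling_counts(events: Iterable[Event], window_ms: int) -> Iterator[Tuple[int, Dict[str, int]]]:
    """Yield (window_start_ms, counts per player) for each closed tumbling window.

    Assumes events arrive in timestamp order (no late-event handling).
    """
    current_window: Optional[int] = None
    counts: Dict[str, int] = defaultdict(int)
    for ts, player in events:
        window_start = ts - ts % window_ms
        if current_window is not None and window_start != current_window:
            yield current_window, dict(counts)  # window closed: emit its result
            counts = defaultdict(int)
        current_window = window_start
        counts[player] += 1
    if current_window is not None:
        yield current_window, dict(counts)  # flush the last open window


events = [(0, "p1"), (4_000, "p2"), (9_000, "p1"), (10_000, "p1"), (15_000, "p2"), (21_000, "p2")]
results = list(tumbling_counts(events, window_ms=10_000))
print(results)
# [(0, {'p1': 2, 'p2': 1}), (10000, {'p1': 1, 'p2': 1}), (20000, {'p2': 1})]
```

Because it is a generator, each window's counts become available as soon as the first event of the next window arrives, rather than after the whole stream has been read.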

Tiered storage is a key part of the modern streaming stack. This approach helps you keep costs down and performance up. With tiered storage, you define the data that’s critical to your real-time analytics, and that data is kept in the most accessible tier. All other data can be stored wherever the balance between cloud storage costs and accessibility fits your needs. Tiered storage solutions include our own Shadow Indexing and StarTree’s tiered storage for Apache Pinot™.
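The core idea of tiered storage can be sketched as a simple age-based policy: recent segments stay on fast local storage, older ones move to a cheaper remote tier, and reads check the fast tier first. In this hypothetical Python model, two dictionaries stand in for local disk and an object store, and the one-hour threshold is an assumption. It illustrates the concept only; it is not how Shadow Indexing or StarTree's tiered storage is implemented.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Segment:
    base_offset: int  # first offset stored in this segment
    created_s: float  # creation time in seconds
    data: bytes


@dataclass
class TieredStore:
    hot_retention_s: float  # how long segments stay in the fast tier (assumption)
    local: Dict[int, Segment] = field(default_factory=dict)   # stands in for local disk
    remote: Dict[int, Segment] = field(default_factory=dict)  # stands in for object storage

    def add(self, segment: Segment) -> None:
        """New data always lands in the fast, local tier."""
        self.local[segment.base_offset] = segment

    def enforce_policy(self, now_s: float) -> List[int]:
        """Move segments older than hot_retention_s to the remote tier."""
        moved = []
        for base, segment in list(self.local.items()):
            if now_s - segment.created_s > self.hot_retention_s:
                self.remote[base] = segment
                del self.local[base]
                moved.append(base)
        return moved

    def read(self, base_offset: int) -> bytes:
        """Serve from the fast tier when possible, else fall back to the remote tier."""
        if base_offset in self.local:
            return self.local[base_offset].data
        return self.remote[base_offset].data


store = TieredStore(hot_retention_s=3600.0)
store.add(Segment(base_offset=0, created_s=0.0, data=b"older events"))
store.add(Segment(base_offset=100, created_s=5000.0, data=b"recent events"))
moved = store.enforce_policy(now_s=6000.0)
print(moved)          # [0]
print(store.read(0))  # b'older events', now served from the remote tier
```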

Serving layer tools are real-time online analytical processing (OLAP) databases like Pinot, Apache Druid, and ClickHouse. They store and query large volumes of data at low latency, so real-time data can be accessed and processed by other tools.
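Queries against a serving layer are typically time-bucketed aggregations over fresh data. As a stand-in, the sketch below uses Python's built-in `sqlite3` module, which is not an OLAP database, to show the shape of such a query: purchases and revenue per minute from a hypothetical `game_events` table. Pinot, Druid, and ClickHouse each have their own SQL dialects and time functions, so treat the SQL as illustrative rather than copy-paste ready for any of them.

```python
import sqlite3

# In-memory SQLite database standing in for a real-time OLAP store
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE game_events (ts_ms INTEGER, player_id TEXT, event_type TEXT, amount REAL)"
)
rows = [  # hypothetical sample events
    (1_000, "p1", "item_purchase", 4.99),
    (2_000, "p2", "item_purchase", 9.99),
    (3_000, "p1", "match_start", None),
    (61_000, "p1", "item_purchase", 1.99),
]
conn.executemany("INSERT INTO game_events VALUES (?, ?, ?, ?)", rows)

# Integer division buckets each event into the minute it happened in
query = """
SELECT (ts_ms / 60000) * 60000 AS minute_start,
       COUNT(*)               AS purchases,
       ROUND(SUM(amount), 2)  AS revenue
FROM game_events
WHERE event_type = 'item_purchase'
GROUP BY minute_start
ORDER BY minute_start
"""
result = conn.execute(query).fetchall()
for minute_start, purchases, revenue in result:
    print(minute_start, purchases, revenue)
conn.close()
```

Dashboards and other analytics consumers would issue queries of this shape repeatedly, which is why serving-layer databases are built to answer them over continuously arriving data.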

Analytics consumers are user-facing dashboards, “headless” Business Intelligence software, and pretty much anything that can consume the analytics from the serving layer via an API. They can also be carbon-based lifeforms like data scientists and analysts who want to run queries on the collected analytics at their leisure.

Turbocharge real-time analytics with Redpanda

The ability to process and analyze data as soon as it arrives enables organizations to unlock real-time insights, optimize operations, improve customer experiences, and sharpen their competitive edge. However, with the increasing amount of data flowing in from distributed sources, you need a highly robust and scalable streaming data infrastructure to keep up. Sadly, legacy streaming data platforms like Kafka have long fallen behind today’s growing throughput demands and are famous for unsustainable costs and complexity.

Enter Redpanda: a simple, powerful, and cost-efficient streaming data platform that effortlessly handles large volumes of data so you can perform complex analytics and supercharge decision-making with real-time data. Plus, it acts as a drop-in Kafka replacement and works seamlessly with Kafka’s entire ecosystem.

In short, Redpanda is:

Simple: Deployed as a single, independent, self-contained binary to provide a streamlined experience for developers and admins alike. No ZooKeeper. No JVM.

Powerful: Engineered in C++ with a thread-per-core architecture – 10x faster than Kafka and ready to support data-intensive workloads with low latency.

Cost-effective: Designed to be hardware-efficient, so it uses 3x fewer compute resources on average, reducing the total cost of streaming data by 6x.

Reliable: Jepsen-verified for data safety, it uses the Raft consensus protocol and cloud-first storage to ensure zero data loss and guarantee high availability at scale. It also gives you complete data sovereignty, even in the cloud.

To get started, you can find Redpanda Community Edition on GitHub or try Redpanda Cloud for free. Then go ahead and dive into the Redpanda blog for examples, step-by-step tutorials, and real-world customer stories.

Resources

Congratulations! You now know a whole lot more about real-time analytics than you did when you started. If you have questions, ask away in the Redpanda Community on Slack. If you prefer to learn on your own, dig into these resources:
